Consuming AWS EventBridge Events inside StackStorm

Amazon Web Services (AWS) recently launched a new product called Amazon EventBridge.

EventBridge has a lot in common with StackStorm, a popular open-source, cross-domain, event-driven infrastructure automation platform. In some ways, you could think of it as a very lightweight and limited version of StackStorm offered as a service (SaaS).

In this blog post I will show you how you can extend StackStorm functionality by consuming thousands of different events which are available through Amazon EventBridge.

Why?

First of all you might ask why you would want to do that.

StackStorm Exchange already offers many different packs which allow users to integrate with various popular projects and services (including AWS). In fact, StackStorm Exchange integration packs expose over 1500 different actions.

Even though StackStorm Exchange offers integration with many different products and services, those integrations are still limited, especially on the incoming events / triggers side.

Since event-driven automation is all about the events which can trigger various actions and business logic, the more events you have access to, the better.

Run a workflow which runs an Ansible provision, creates a CloudFlare DNS record, and adds the new server to Nagios and to the load balancer when a new EC2 instance is started? Check.

Honk your Tesla Model S horn when your satellite passes and establishes contact with AWS Ground Station? Check.

Having access to many thousands of different events exposed through EventBridge opens up almost unlimited automation possibilities.

For a list of some of the events supported by EventBridge, please refer to the AWS documentation.

Consuming EventBridge Events Inside StackStorm

There are many possible ways to integrate StackStorm and EventBridge and consume EventBridge events inside StackStorm, some more complex than others.

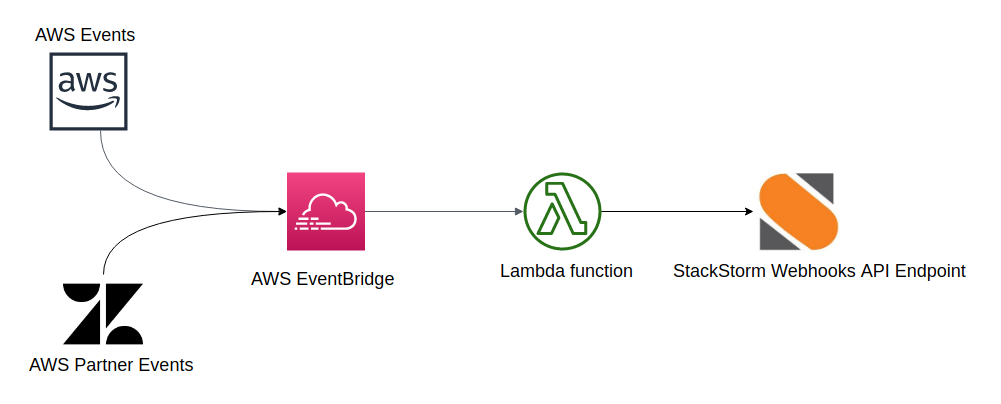

In this post, I will describe an approach which uses an AWS Lambda function.

I decided to go with the AWS Lambda approach because it’s simple and straightforward. It looks like this:

- An event is generated by an AWS service or a partner SaaS product

- An EventBridge rule matches the event and triggers an AWS Lambda function (the rule target)

- The AWS Lambda function sends the event to StackStorm using the StackStorm Webhooks API endpoint

1. Create StackStorm Rule Which Exposes a New Webhook

First we need to create a StackStorm rule which exposes a new eventbridge webhook. This webhook will be available through the https://<example.com>/api/v1/webhooks/eventbridge URL.

    wget https://gist.githubusercontent.com/Kami/204a8f676c0d1de39dc841b699054a68/raw/b3d63fd7749137da76fa35ca1c34b47fd574458d/write_eventbridge_data_to_file.yaml
    st2 rule create write_eventbridge_data_to_file.yaml

The rule definition looks like this:

    name: "write_eventbridge_data_to_file"
    pack: "default"
    description: "Test rule which writes AWS EventBridge event data to file."
    enabled: true

    trigger:
      type: "core.st2.webhook"
      parameters:
        url: "eventbridge"

    criteria:
      trigger.body.detail.eventSource:
        pattern: "ec2.amazonaws.com"
        type: "equals"
      trigger.body.detail.eventName:
        pattern: "RunInstances"
        type: "equals"

    action:
      ref: "core.local"
      parameters:
        cmd: "echo \"{{trigger.body}}\" >> ~/st2.webhook.out"

You can have as many rules as you want with the same webhook URL parameter. This means you can utilize the same webhook endpoint to match as many different events and trigger as many different actions / workflows as you want.

In the criteria field we filter on events which correspond to new EC2 instance launches (eventName matches RunInstances and eventSource matches ec2.amazonaws.com). StackStorm rule criteria comparison operators are quite expressive, so you can also get more creative than that.
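To make the filtering logic concrete, here is a minimal Python sketch of how the two criteria above could be evaluated against an incoming webhook payload. This is not StackStorm's actual implementation: the helper names (resolve, matches) and the trimmed sample payloads are hypothetical, and only the equals operator is modelled.

```python
from typing import Any

# Criteria copied from the rule above; only the "equals" operator is modelled.
CRITERIA = {
    "trigger.body.detail.eventSource": {"type": "equals", "pattern": "ec2.amazonaws.com"},
    "trigger.body.detail.eventName": {"type": "equals", "pattern": "RunInstances"},
}

# Sentinel for keys that are absent from the payload.
_MISSING = object()


def resolve(payload: dict, dotted_key: str) -> Any:
    """Walk a dotted key such as 'trigger.body.detail.eventName' through nested dicts."""
    value: Any = {"trigger": payload}
    for part in dotted_key.split("."):
        if not isinstance(value, dict) or part not in value:
            return _MISSING
        value = value[part]
    return value


def matches(payload: dict, criteria: dict = CRITERIA) -> bool:
    """Return True only if every criterion holds (all criteria must match)."""
    for key, rule in criteria.items():
        if rule["type"] != "equals":
            raise ValueError(f"operator not modelled in this sketch: {rule['type']}")
        if resolve(payload, key) != rule["pattern"]:
            return False
    return True


# Hypothetical, heavily trimmed webhook payloads.
launch = {"body": {"detail": {"eventSource": "ec2.amazonaws.com", "eventName": "RunInstances"}}}
stop = {"body": {"detail": {"eventSource": "ec2.amazonaws.com", "eventName": "StopInstances"}}}

print(matches(launch))
print(matches(stop))
```

Only the first payload satisfies both criteria, so only that event would trigger the action.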

As this is just an example, we simply write the body of the matched event to a file on disk (/home/stanley/st2.webhook.out). In a real-life scenario, you would likely use an Orquesta workflow which runs your more or less complex business logic.

This could involve steps and actions such as:

- Add new instance to the load-balancer

- Add new instance to your monitoring system

- Notify a Slack channel that a new instance has been started

- Configure your firewall for the new instance

- Run Ansible provision on it

- etc.

2. Configure and Deploy AWS Lambda Function

Once your rule is configured, you need to configure and deploy the AWS Lambda function.

You can find code for the Lambda Python function I wrote here - https://github.com/Kami/aws-lambda-event-to-stackstorm.

I decided to use the Lambda Python environment, but the actual handler is very simple, so I could just as easily have used JavaScript and the Node.js environment instead.
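To give an idea of how little such a handler needs to do, here is a minimal sketch using only the Python standard library. It is not the code from the repository above: the environment variable names (ST2_WEBHOOK_URL, ST2_API_KEY) and the build_request helper are assumptions made for illustration. The only StackStorm-specific part is the St2-Api-Key header used for API key authentication.

```python
import json
import os
import urllib.request

# Hypothetical environment variable names; the repository's config.yaml may use different ones.
WEBHOOK_URL_ENV = "ST2_WEBHOOK_URL"
API_KEY_ENV = "ST2_API_KEY"


def build_request(event: dict, webhook_url: str, api_key: str) -> urllib.request.Request:
    """Build the POST request that carries the EventBridge event as a JSON body."""
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "St2-Api-Key": api_key,  # StackStorm API key authentication header
        },
        method="POST",
    )


def handler(event: dict, context: object) -> dict:
    """Lambda entry point: forward the incoming event to the StackStorm webhook."""
    request = build_request(event, os.environ[WEBHOOK_URL_ENV], os.environ[API_KEY_ENV])
    with urllib.request.urlopen(request, timeout=10) as response:
        return {"status": response.status}
```

Keeping the request construction in its own function makes it possible to check the payload and headers locally without sending anything over the network.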

    git clone https://github.com/Kami/aws-lambda-event-to-stackstorm.git
    cd aws-lambda-event-to-stackstorm

    # Install python-lambda package which takes care of creating and deploying
    # Lambda bundle for you
    pip install python-lambda

    # Edit config.yaml file and make sure all the required environment variables
    # are set - things such as StackStorm Webhook URL, API key, etc.
    # vim config.yaml

    # Deploy your Lambda function
    # For that command to work, you need to have awscli package installed and
    # configured on your system (pip install --upgrade --user awscli ; aws configure)
    lambda deploy

    # You can also test it locally by using the provided event.json sample event
    lambda invoke

You can confirm that the function has been deployed by going to the AWS console or by running the following AWS CLI commands:

    aws lambda list-functions
    aws lambda get-function --function-name send_event_to_stackstorm

And you can verify that it’s running by tailing the function logs:

    LAMBDA_FUNCTION_NAME="send_event_to_stackstorm"
    LOG_STREAM_NAME=$(aws logs describe-log-streams --log-group-name "/aws/lambda/${LAMBDA_FUNCTION_NAME}" --query 'logStreams[*].logStreamName' | jq -r '.[0]')
    aws logs get-log-events --log-group-name "/aws/lambda/${LAMBDA_FUNCTION_NAME}" --log-stream-name "${LOG_STREAM_NAME}"

3. Create AWS EventBridge Rule Which Runs Your Lambda Function

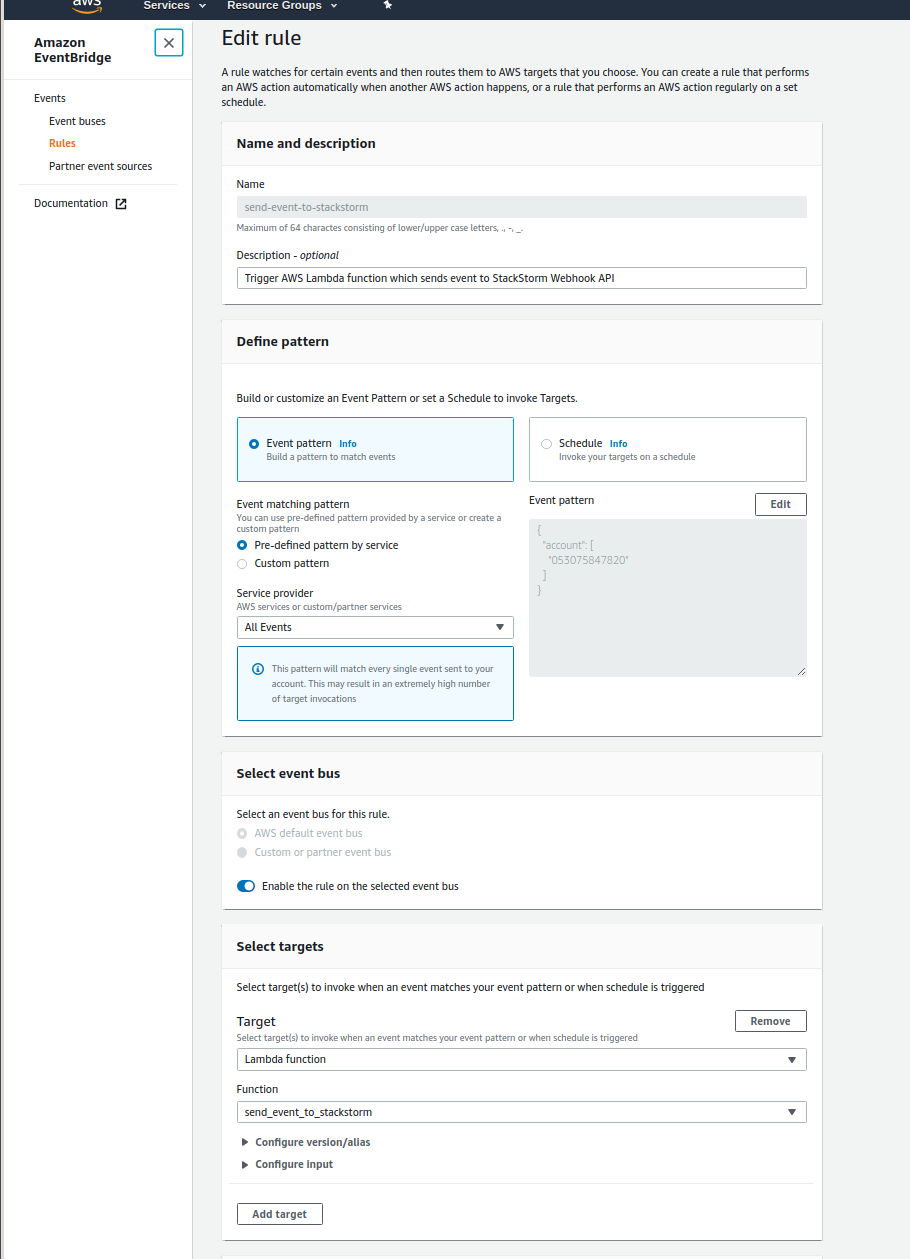

Now we need to create an AWS EventBridge rule which will match the events and trigger the AWS Lambda function.

As you can see in the screenshot above, I simply configured the rule to send every event to the Lambda function.

This may be OK for testing, but for production usage you should narrow this down to the actual events you are interested in. If you don’t, you might be surprised by your AWS Lambda bill - even on small AWS accounts, there are tons of events being constantly generated by various services and account actions.

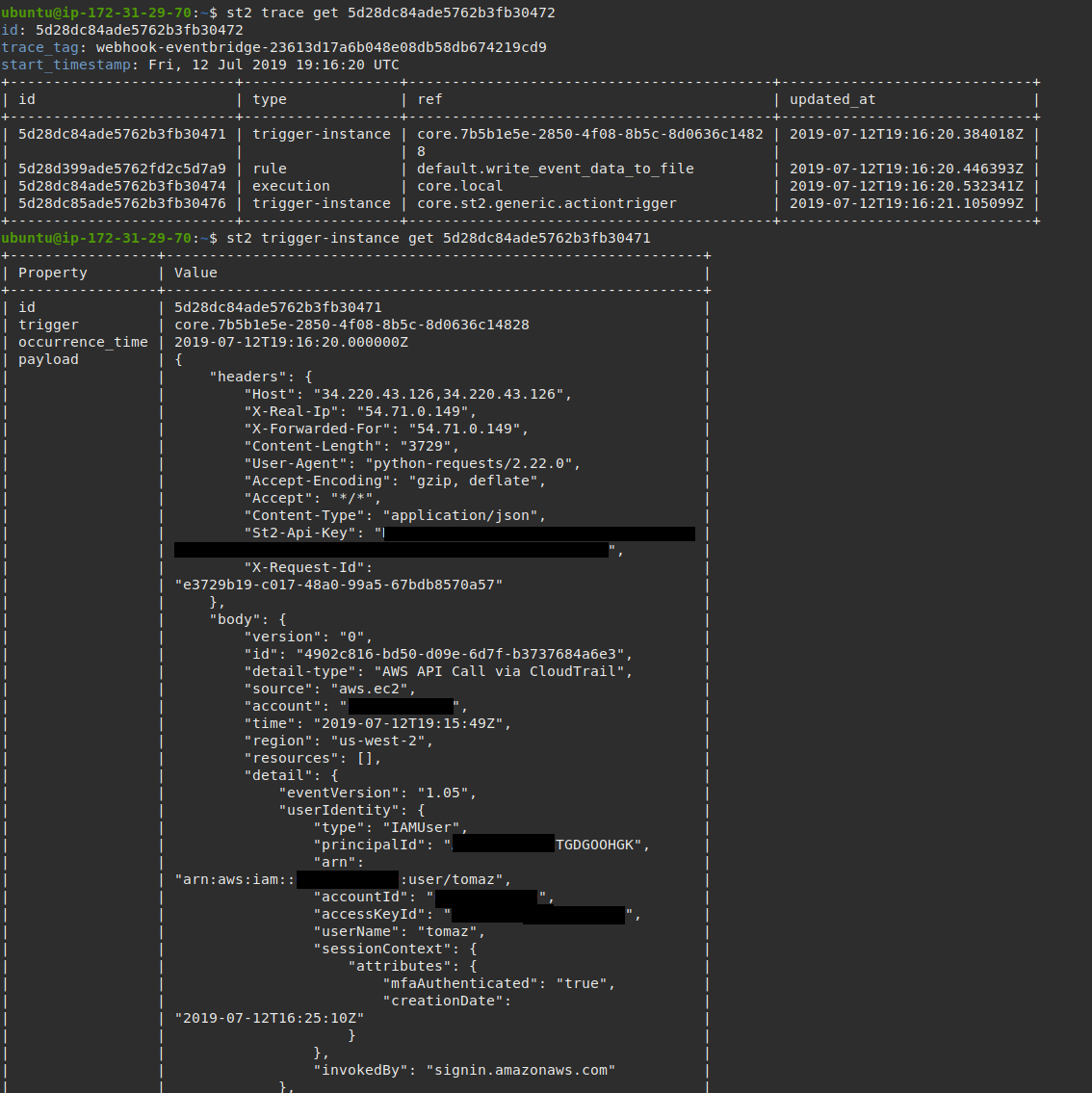
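As an illustration of narrowing the rule down, the sketch below builds an EventBridge event pattern which only matches EC2 RunInstances calls, mirroring the StackStorm rule criteria used earlier. It assumes those events arrive as CloudTrail API call events (which requires CloudTrail to be enabled on the account); adjust the pattern to the events you actually care about.

```python
import json

# Event pattern limited to EC2 RunInstances API calls, mirroring the
# StackStorm rule criteria used earlier in this post.
# Assumption: the events are delivered as CloudTrail API call events.
event_pattern = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": ["RunInstances"],
    },
}

# EventBridge expects the pattern as a JSON document, for example when
# pasted into the rule's custom event pattern editor in the console.
pattern_json = json.dumps(event_pattern, indent=2)
print(pattern_json)
```

With a pattern like this in place, the Lambda function is only invoked for instance launches instead of for every event in the account.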

4. Monitor Your StackStorm Instance for New AWS EventBridge Events

As soon as you configure and enable the rule, new AWS EventBridge events (trigger instances) should start flowing into your StackStorm deployment.

You can monitor for new instances using the st2 trace list and st2 trigger-instance list commands.

And as soon as a new EC2 instance is launched, your action which was defined in the StackStorm rule above will be executed.

Conclusion

This post showed how easy it is to consume AWS EventBridge events inside StackStorm and tie those two services together.

Gaining access to many thousands of different AWS and AWS partner events inside StackStorm opens up many new possibilities and allows you to apply cross-domain automation to many new situations.