Cassandra Anti-Patterns

Ljubljana Cassandra Meetup, March 2014

Agenda

- Who am I?

- My Experience with Cassandra

- Cassandra anti-patterns

- Delete heavy work-loads and queue like data sets

- Questions?

Who am I?

- Tomaz Muraus

- Long time Pythonista

- Apache Libcloud Committer & Project chair

- Previously: Cloudkick, Rackspace

- Currently: DivvyCloud

- Likes: Distributed systems, big data, open standards and systems, startups

- tomaz.me

- github.com/Kami

- @kamislo

My Experience with Cassandra

- ~4 years of hands-on experience with Cassandra

- Mostly Thrift and CQL 2, some CQL 3 experience

- Used versions from 0.6 to 2.0

- Operational experience and deployment on physical hardware and on cloud servers

- Multi region deployments

- Contributor to Node.js and Python client

- Cloudkick (time-series data), Rackspace Cloud Monitoring (primary data storage, time series data), Rackspace Service Registry (primary data storage), Email Analytics (time series data)

What is Cassandra?

- Distributed NoSQL data store

- Hybrid column oriented data model

- Inspired by Amazon's Dynamo paper

- Originally developed at Facebook to power inbox search feature (simple inverted index)

- Facebook open sourced it / threw code on Google Code

- Development picked over by guys at Rackspace

- Later on joined Apache Incubator and became independent Apache project

Cassandra Features

- Distributed - every node in the cluster is equal (no SPOF)

- Tunable replication

- Tunable consistency (eventual to strong consistency)

- Horizontal scalability

- Highly available and fault tolerant (depends on replication and consistency settings)

- Easy to use with addition of Cassandra Query Language (CQL)

- Easy to operate - single component / daemon (no need to configure, operate and run multiple different services)

- Allows you to define schema

Other similar data stores

- Hbase (until recently, name node in HDFS was a SPOF)

- Riak (IIRC, you still need to pay for wan-to-wan replication)

MongoDB- no, but it might be OK as a backend for your CMSRedis- again, it's apples vs oranges, even with recent work on Redis Cluster- There are a bunch of other very simple key-value stores which have similar distributed guarantees, but lack other features of Cassandra

Why use Cassandra instead of X?

- Maybe you shouldn't

- You should always use the simplest and the best tool to get the job done

- Cassandra may not always be that tool and that's a good thing! (being everything to everyone is a recipe for a disaster)

- You care about your data (looking at you MongoDB)

- You have a lot* of data

- You want your app to be HA

- You will grow (horizontal scalability)

Cassandra Anti-Patterns

- Those fall into different categories:

- Deployment

- Data model

- Access patterns

- Today we will focus on queue like datasets and delete heavy workloads

Quick dive into Service Registry

Service Registry is an API driven cloud service which allows you to keep track of your services and store configuration values in a centralized place and get notified when a value changes.

What is Service Registry?

- Three main groups of functionality

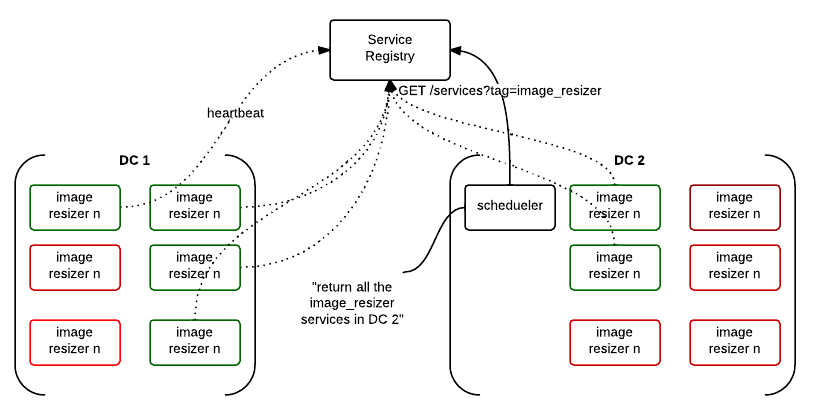

- Service Discovery

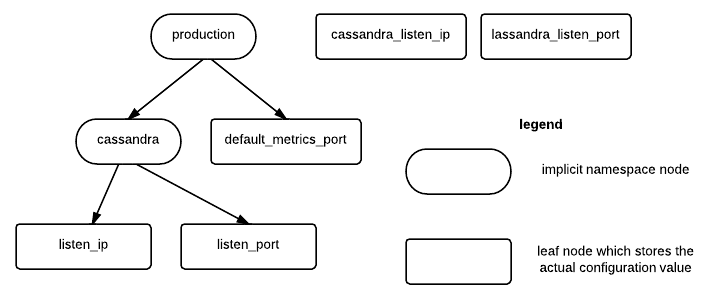

- Configuration Storage

- Platform for automation

Service Discovery

Configuration Storage

What is Service Registry?

- Focuses on applications / services not servers

- Small, focused service which does a couple of things well (small, modular utilities FTW!)

- Makes building large and highly available applications easier

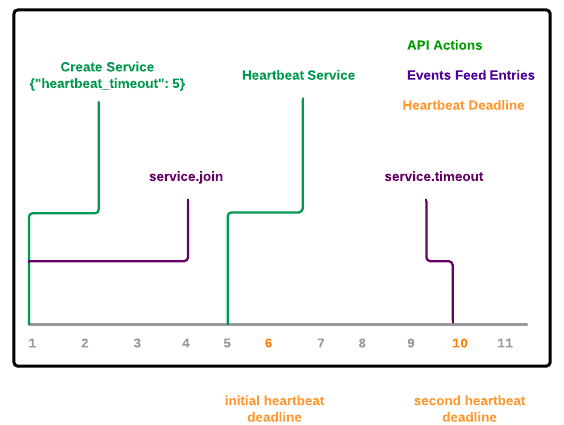

Heartbeating in Service Registry

- Heartbeating is letting us know that your service is still alive

- If we don't receive a heartbeat in a defined time interval, we treat service as dead / timed out

Heartbeating in Service Registry

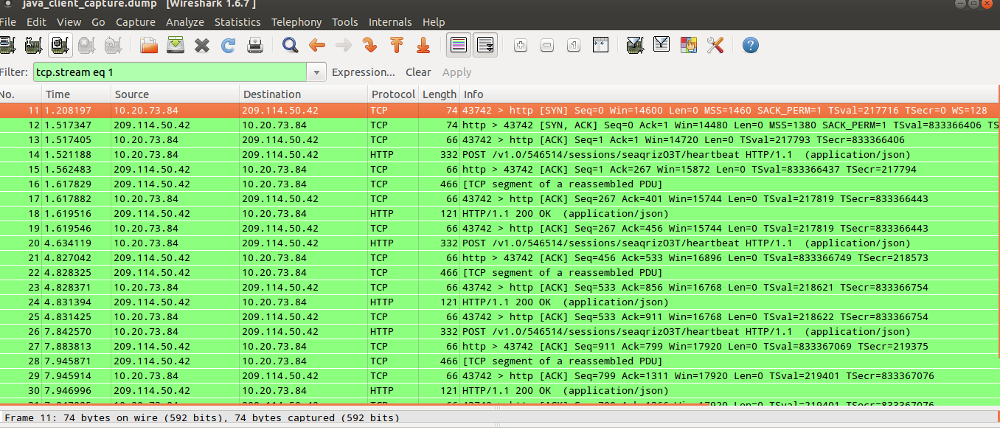

Heartbeating in Service Registry

How heartbeating works (high-level)

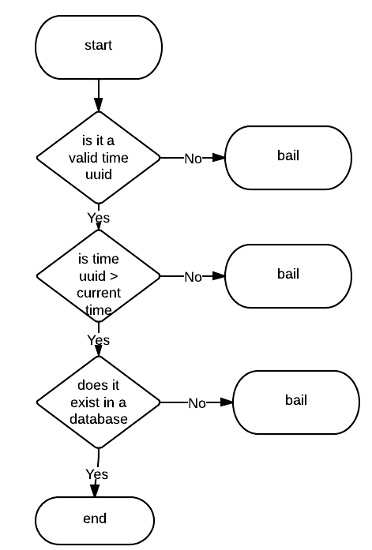

Validating the token (high-level)

Example heartbeat request

{

"token": "25e4e1d0-c18e-11e2-98ba-7426829cefcf"

}

- Token is a opaque string to the end user

- For us, it's a Time UUID which contains a current heartbeat deadline

Implementing Service Registry on top of Cassandra

- Why Cassandra?

- We know it well

- Good distributed architecture and capabilities

- Easy to operate

- Matches our service HA and consistency requirements

Things to know about Cassandra

- Because of the underlying storage format* and the way deletes works, it's usually** a bad fit for queue like and delete heavy work-loads.

- * Log structured append only files (and sstables), not a unique problem to Cassandra

- ** Depends on data model, compaction strategy and access patterns, there are ways to work around it

Distributed deletes and tombstones

- When a user deletes a column, it's not actually deleted from the underlying storage. It's just marked to be deleted and converted to a tombstone

- Tombstones eventually get deleted during a minor or a major compaction*

- * It's complex and depends on multiple factors (cassandra version, is the deleted column located in multiple sstable files, etc.)

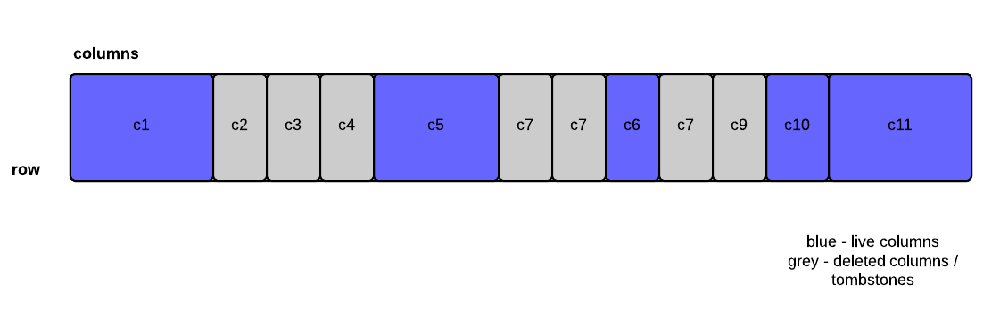

How do tombstones affect range query reads?

- Gimme a single column (e.g. c5) - no biggie, uses bloom filter and pointer to a offset in a sstable file, skips tombstones

- Gimme all the columns between c5 and c10 (inclusive) - houston, we have a problem (need to do late filtering)!

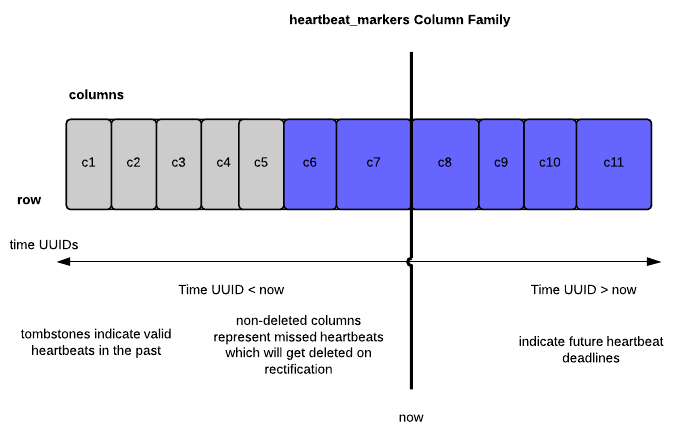

Service Registry, heartbeating and data model

CREATE COLUMNFAMILY heartbeat_markers ( KEY ascii PRIMARY KEY ) WITH comparator=uuid; ALTER COLUMNFAMILY heartbeat_markers WITH gc_grace_seconds=108000; ALTER COLUMNFAMILY heartbeat_markers WITH caching='keys_only';

- Row key is account id

- Column name is a Time UUID

- Column value contains a service id

How it works?

- On heartbeat, insert a new column (future deadline)

- On rectification, delete all the columns from START_TIME to NOW

- Rectification - repair on read (similar to Cassandra)

How it works?

How we avoided the piling tombstones problem?

- Cassandra 1.2.x has improved support for tombstone removal during minor compactions

- Low gc_grace_seconds (default is 10 days)

- Small range queries

- Rectifier service

- Sharding across multiple rows

Other possible ways to mitigate / avoid this problem

- Use a data store which is more appropriate for your access patterns / type of data you store

- Short lived column families - periodically re-create column family (TRUNCATE + hard remove data file on disk)